Case Study - Training Custom Language Models for Resource-Constrained Environments

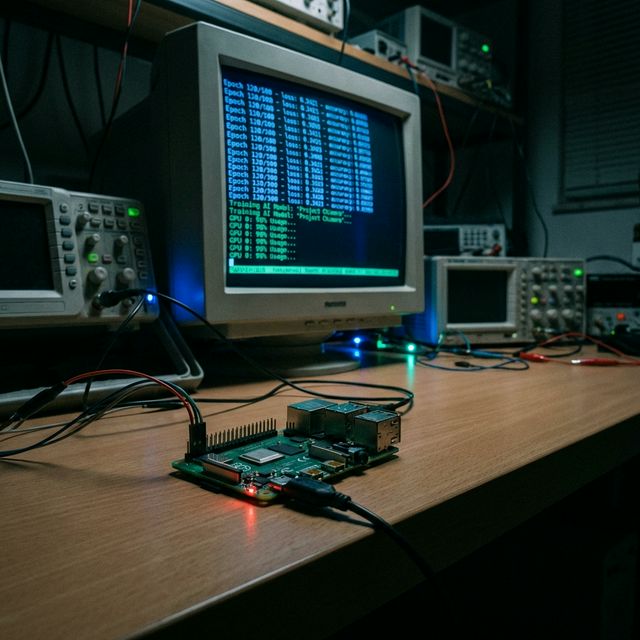

An internal research initiative exploring how to train and deploy intelligent language models on minimal hardware — from Raspberry Pi to microcontrollers.

- Client

- Starmind Research

- Year

- Service

- Internal R&D, Edge AI

Why This Matters

The AI industry is overwhelmingly focused on scale — larger models, more GPUs, bigger clusters. But a growing number of production environments cannot use cloud-based AI:

- Air-gapped defense networks with no external connectivity

- HIPAA-regulated facilities where data cannot leave the premises

- Embedded systems in manufacturing, robotics, and IoT

- Edge deployments in remote or bandwidth-constrained locations

These environments need models that are small enough to run locally, fast enough to be useful, and capable enough to solve real problems. Starmind exists to prove this is achievable.

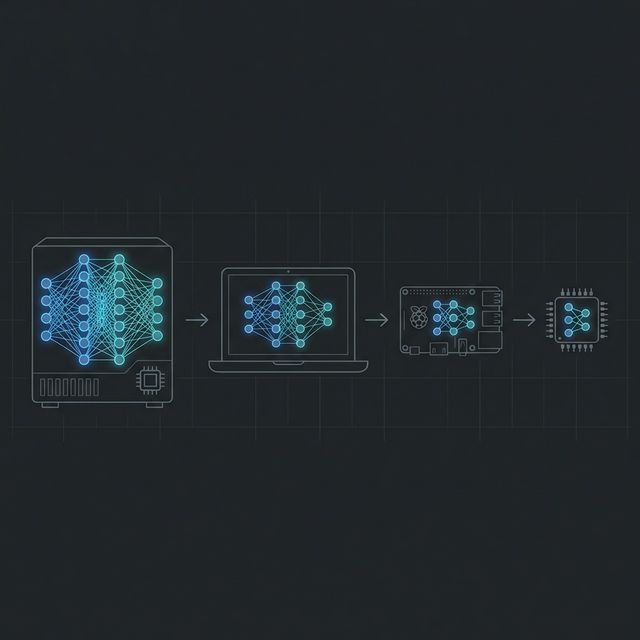

The Research Program

Starmind operates as an open research initiative. All code, datasets, model weights, and training budgets are shared publicly. The program has been supported by compute grants from Prime Intellect, CloudRift, Inflection Grants, AWS Bedrock, and Thinking Machines.

- Model Pre-training

- Fine-tuning

- Quantization

- Architecture Search

- Edge Deployment

- Synthetic Data Generation

The research is structured as a series of experiments, each targeting a specific capability or hardware constraint.

Experiment: Language Models on Microcontrollers

One of the most extreme experiments trained a transformer-based language model to run on the RP2040 — a microcontroller with just 264KB of SRAM and no floating-point unit.

The findings were significant:

- 176 architectural variants were tested across 1K–10K parameter models

- KV caching provided 3–7x speed improvement for multi-token generation

- 1K parameter models achieved 20–32 tokens per second — real-time capable

- 8K parameter models hit 2–5 tokens per second — the best balance of capability and reliability

Key insight: dimension size has the highest impact on inference speed (40–50% loss per doubling), while vocabulary size has minimal impact (8–12% per doubling). This inverts conventional wisdom about model scaling.

Production-Ready Architecture Templates

The research produced two reference architectures for microcontroller deployment:

- Maximum Speed (1K params): Ultra-narrow dimensions, 64–128x FFN ratio, single layer, 20–32 tok/s

- Balanced Production (8K params): 256–512 vocabulary, 2–3 layers, 8 attention heads, 2–5 tok/s

These findings directly inform how we approach on-premise and air-gapped AI deployments for clients.

Experiment: Domain-Specific 3D Generation

CadMonkey is a small language model trained to generate 3D files in OpenSCAD — a parametric, code-based 3D modeling language used in mechanical engineering.

The model was fine-tuned from Gemma3-1B using synthetic datasets generated through a multi-stage pipeline:

- Base dataset curation from 7,000 open-source OpenSCAD files

- Synthetic data generation using frontier models with cost optimization

- Horizontal scaling — expanding object variety across categories

- Vertical scaling — increasing complexity per object

The entire experiment ran over three weekends using approximately $500 in compute credits. The resulting model generates functional 3D files from natural language prompts and runs on consumer hardware.

What This Means for Production

Starmind is not a product. It is a research program that informs how BeeNex approaches constrained-environment deployments.

When a client needs AI that runs on-premise, in an air-gapped network, or on embedded hardware, the engineering decisions are informed by direct experience — not theoretical knowledge.

- Architecture variants tested

- 176

- Minimum viable SRAM

- 264KB

- Total compute for CadMonkey

- $500

- Peak microcontroller speed

- 32 tok/s

The gap between AI research and AI deployment is an engineering problem. Starmind is where we study the hardest edge of that problem — so that production deployments are built on tested foundations, not assumptions.

Research Sponsors

Starmind's experiments are made possible by compute grants and partnerships with organizations committed to advancing accessible AI research.

Explore the full research at starmind.comfyspace.tech.